Introduction

In the rapidly evolving landscape of artificial intelligence (AI) and machine learning (ML), specialized hardware accelerators like Neural Processing Units (NPUs) are becoming increasingly significant. NPUs, a class of AI accelerator, are designed to handle AI and ML tasks more efficiently than general-purpose processors like CPUs (Central Processing Units) and GPUs (Graphics Processing Units). This blog post covers the advantages of NPUs, their role in enhancing Android head units, and how their performance metrics, such as Tera Operations Per Second (TOPS), relate to CPU and GPU processing power.

What is an NPU?

An NPU is a specialized processor designed to accelerate neural network operations and AI tasks. Unlike general-purpose CPUs and GPUs, NPUs are optimized for data-driven parallel computing, making them highly efficient at processing massive multimedia data like videos and images, and executing neural network tasks with high throughput and minimal power consumption.

Key Characteristics of NPUs

- Specialized Hardware: NPUs are dedicated AI chips that offload AI tasks from the CPU and GPU, freeing these general-purpose processors for other work.

- High Efficiency: NPUs are designed to execute AI tasks quickly and efficiently, often with lower power consumption, making them ideal for mobile devices and edge computing.

- Parallel Processing: NPUs excel at data-driven parallel computing, which is crucial for processing large datasets and running complex neural network models.

Advantages of NPUs Over CPUs and GPUs

Higher Performance

NPUs can run neural networks more efficiently than CPUs and GPUs, and often faster within the same power budget. This is because NPUs are specifically designed for the repetitive and parallel nature of AI tasks, chiefly the multiply-accumulate operations that dominate neural network inference. For instance, NPUs can handle tasks like facial recognition, object detection, and speech recognition with minimal lag, significantly enhancing the user experience on smartphones and other devices.

Lower Power Consumption

One of the standout features of NPUs is their power efficiency. They are designed to deliver high performance while minimizing power consumption, making them suitable for energy-sensitive applications like mobile devices and edge computing. This efficiency is crucial for extending battery life in smartphones and other portable devices.
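The trade-off between speed and power can be made concrete with a little arithmetic. The sketch below is illustrative only: the function names and the wattage and latency figures are made-up assumptions, not measurements of any real chip. It shows that a processor which finishes an inference sooner can still spend more energy doing it.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency figure often quoted alongside raw TOPS."""
    return tops / watts


def energy_per_inference_mj(watts: float, latency_ms: float) -> float:
    """Energy for one inference in millijoules (1 W x 1 ms = 1 mJ)."""
    return watts * latency_ms


# Hypothetical figures, chosen only to illustrate the arithmetic.
npu_energy = energy_per_inference_mj(watts=2.0, latency_ms=5.0)
gpu_energy = energy_per_inference_mj(watts=10.0, latency_ms=3.0)

print(f"NPU: {npu_energy} mJ per inference")
print(f"GPU: {gpu_energy} mJ per inference")
```

With these assumed numbers the GPU is faster per inference but uses three times the energy, which is the kind of difference that matters for battery life.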

Cost-Effectiveness

NPUs can offer a cost-effective solution for AI and ML tasks. By offloading these tasks from the CPU and GPU, NPUs can help reduce the overall cost of the system while still delivering high performance. This makes them an attractive option for manufacturers looking to integrate advanced AI capabilities into their devices without significantly increasing costs.

Enhanced Privacy

With NPUs, AI computations can be performed on-device rather than in the cloud. This not only reduces latency but also enhances privacy by keeping sensitive data on the device. This is particularly important for applications like facial recognition and voice assistants, where user data privacy is a significant concern.

NPU TOPS: Measuring Performance

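Tera Operations Per Second (TOPS) is a common metric used to measure the performance of NPUs. It indicates how many trillions of operations an NPU can perform per second, and is usually quoted as a theoretical peak at a low-precision format such as INT8. For example, Qualcomm quotes 45 TOPS for the Hexagon NPU in the Snapdragon X Elite (its 75 TOPS figure covers the whole platform, combining CPU, GPU, and NPU), while Apple’s M3 Neural Engine delivers 18 TOPS.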
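A peak TOPS figure is typically derived from the number of multiply-accumulate (MAC) units and the clock frequency, counting each MAC as two operations (one multiply, one add). The sketch below shows that calculation; the function name and the hardware figures are hypothetical and do not describe any specific NPU.

```python
def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Theoretical peak throughput in TOPS.

    Each MAC unit is assumed to complete one multiply-accumulate per cycle,
    which is conventionally counted as two operations.
    """
    return mac_units * ops_per_mac * clock_hz / 1e12


# Hypothetical NPU: 4096 MAC units clocked at 1 GHz.
print(peak_tops(mac_units=4096, clock_hz=1.0e9))  # 8.192
```

Because this is a peak figure, it assumes every MAC unit is busy on every cycle, which real workloads rarely achieve.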

Comparing NPU TOPS with CPU and GPU Performance

While TOPS is a useful metric for comparing NPUs with one another, it is not directly comparable to the performance metrics used for CPUs and GPUs. GPU throughput is usually quoted in TFLOPS (trillions of floating-point operations per second), whereas NPU TOPS figures typically count low-precision integer operations, so the two numbers measure different kinds of work. CPUs are designed for general-purpose computing and can handle a wide range of tasks, while GPUs are optimized for parallel processing and are commonly used for graphics rendering and AI tasks. NPUs, on the other hand, are specialized for neural network operations and can deliver higher performance per watt for these specific tasks.

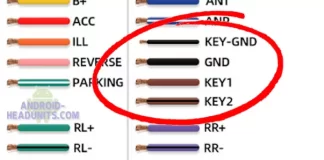
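One reason headline TOPS numbers are hard to compare is that real workloads only use a fraction of the peak. As a rough sketch, inference latency can be estimated from a model's operation count, the peak TOPS, and an assumed utilization. The function name, the 8 GOPs model size, and the 40% utilization below are illustrative assumptions; the 18 TOPS figure is the Neural Engine number quoted above.

```python
def estimated_latency_ms(model_gops: float, peak_tops: float, utilization: float) -> float:
    """Rough latency estimate for one inference, in milliseconds.

    model_gops:  operations per inference, in billions (GOPs)
    peak_tops:   theoretical peak throughput, in TOPS
    utilization: fraction of the peak actually sustained, in (0, 1]
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    effective_ops_per_s = peak_tops * 1e12 * utilization
    return model_gops * 1e9 / effective_ops_per_s * 1e3


# Assumed workload: 8 GOPs per inference at 40% sustained utilization.
print(f"{estimated_latency_ms(8, 18, 0.4):.2f} ms")  # 1.11 ms
```

Halving the utilization doubles the estimated latency, so two chips with the same peak TOPS can behave very differently on the same model.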

Benefits of Advanced NPUs in Android Head Units

Android head units, the in-dash infotainment systems that handle navigation, media, and connectivity in modern vehicles, can significantly benefit from the integration of advanced NPUs. Here are some of the key advantages:

Enhanced User Experience

With NPUs, Android head units can offer a more responsive and intuitive user experience. Tasks like voice recognition, navigation, and multimedia processing can be performed more efficiently, reducing lag and improving overall performance.

Improved Safety Features

Advanced NPUs can enhance the safety features of Android head units by enabling real-time object detection and driver assistance systems. For example, NPUs can process data from cameras and sensors to detect obstacles, pedestrians, and other vehicles, providing timely alerts to the driver.
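"Real-time" here has a concrete meaning: each camera frame must be fully processed before the next one arrives. The sketch below checks whether a detection pipeline fits that per-frame budget. The function names, frame rate, and timing figures are assumptions chosen for illustration, not measurements from any head unit.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at the given frame rate."""
    return 1000.0 / fps


def fits_budget(inference_ms: float, pre_post_ms: float, fps: float) -> bool:
    """True if inference plus pre/post-processing fits within one frame."""
    return inference_ms + pre_post_ms <= frame_budget_ms(fps)


# Hypothetical 30 fps camera: about 33.3 ms available per frame.
print(f"Budget: {frame_budget_ms(30):.1f} ms")
print(fits_budget(inference_ms=20.0, pre_post_ms=8.0, fps=30))  # True
print(fits_budget(inference_ms=30.0, pre_post_ms=8.0, fps=30))  # False
```

If the pipeline misses the budget, the system has to drop frames or run a smaller model, which is where a faster accelerator makes a practical difference.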

Better Connectivity and Integration

NPUs can facilitate better connectivity and integration with other smart devices and systems in the vehicle. This can enable features like seamless voice control, advanced navigation, and real-time data processing, making the driving experience more convenient and enjoyable.

Challenges and Limitations of NPUs

While NPUs offer numerous advantages, they also come with certain challenges and limitations:

Compatibility Issues

NPUs may not be compatible with all existing AI and ML frameworks, libraries, and models, which are mostly designed for CPUs and GPUs. This can limit their adoption and integration into existing systems.
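In practice, runtimes often work around this by running supported operations on the NPU and falling back to the CPU for the rest. The sketch below illustrates that idea in a simplified form. The operator names, the supported-op set, and both functions are hypothetical and do not correspond to any real framework's API.

```python
from typing import Iterable

# Hypothetical set of operators an NPU driver supports.
NPU_SUPPORTED_OPS = frozenset({"conv2d", "relu", "max_pool", "fully_connected"})


def assign_backends(
    ops: Iterable[str], npu_ops: frozenset = NPU_SUPPORTED_OPS
) -> list[tuple[str, str]]:
    """Assign each operator to the NPU if supported, otherwise to the CPU."""
    return [(op, "npu" if op in npu_ops else "cpu") for op in ops]


def count_transitions(plan: list[tuple[str, str]]) -> int:
    """Count backend switches; each one implies a data hand-off between processors."""
    backends = [backend for _, backend in plan]
    return sum(1 for prev, cur in zip(backends, backends[1:]) if prev != cur)


# Hypothetical model containing two operators the NPU does not support.
model = ["conv2d", "relu", "custom_attention", "fully_connected", "softmax"]
plan = assign_backends(model)
for op, backend in plan:
    print(f"{op:>18} -> {backend}")
print("backend transitions:", count_transitions(plan))  # 3
```

Every transition adds overhead, so a model with many unsupported operators may see little benefit from the NPU even though it technically runs.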

Scalability Concerns

NPUs may not be able to scale up or scale out to handle large-scale or distributed AI and ML applications, which may require more resources and coordination than a single device can provide.

Limited Flexibility

NPUs are optimized for specific types of neural network operations and may not support all the variations and types of neural networks, which are constantly evolving and expanding.

Conclusion

Neural Processing Units (NPUs) represent a significant leap forward in the world of AI and machine learning. With their specialized design, high efficiency, and cost-effectiveness, NPUs are poised to play a crucial role in the future of AI computing. By offloading AI tasks from CPUs and GPUs, NPUs can enhance the performance, power efficiency, and privacy of a wide range of devices, from smartphones to Android head units. As the technology continues to evolve, we can expect to see even more advanced NPUs that will further change the way we interact with AI and ML applications.

In summary, NPUs are not just another type of processor; they fill a specific role alongside CPUs and GPUs, offering high throughput and power efficiency for neural network workloads. As we move towards a future where AI is ubiquitous, the role of NPUs will only become more critical, driving innovation and enhancing the capabilities of our devices.